Google has finally revealed Gemini 2.5 Pro Experimental, the most recent development in its line of AI reasoning models. The model is now available on Google AI Studio and to Gemini Advanced customers, and it pushes the limits of reasoning, memory, and multimodal performance.

First, let's break down what exactly makes Gemini 2.5 Pro a game-changer.

Gemini 2.5 Pro is part of Google's continuing work to create more "thinking" AI models. Combining an improved base model with smarter post-training methods, it builds on earlier iterations such as Gemini 2.0 Flash Thinking.

Google DeepMind's CTO, Koray Kavukcuoglu, says that the approach combines "chain-of-thought prompting and reinforcement learning" to improve its reasoning capacity.

But how does this translate into real-world performance?

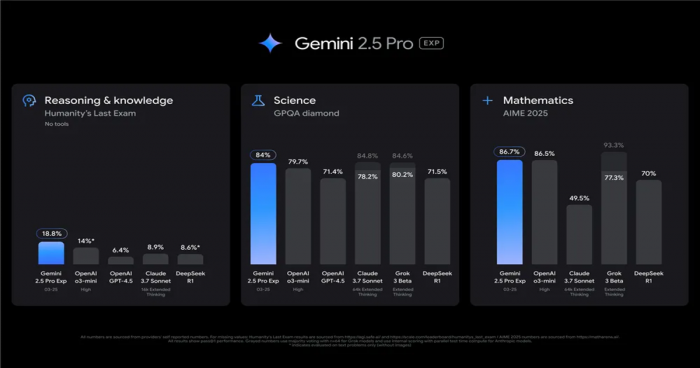

Across many metrics, Gemini 2.5 Pro has bested contemporaries, including Claude 3.7 Sonnet and OpenAI's o3-mini. It shows higher proficiency in:

This positions it as a cognitive engine with practical value rather than just a language model.

And it gets even better when we look at its multimodal features.

Gemini 2.5 Pro is natively multimodal. It can understand and generate content across:

It also enables users to produce more context-aware applications by means of tool use, including image generation, code execution, and even dynamically accessing APIs.

The model's capacity is also set to grow considerably.

Google has stated that Gemini 2.5 Pro will soon offer a 2 million token context window, one of the largest in the industry. Whether it's a 100,000-word document, hours of video transcripts, or entire code repositories, this enables the model to handle huge quantities of data at once.
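To put those numbers in perspective, here is a minimal back-of-the-envelope sketch of how much of a 2 million token window a 100,000-word document might use. The ratio of roughly 1.3 tokens per English word is a common rule of thumb and an assumption here, not a figure from Google; actual counts depend on the model's tokenizer. The function names are hypothetical and only for illustration.

```python
# Rough check of whether a text fits in a context window.
# ASSUMPTION: ~1.3 tokens per English word is a rule of thumb, not a figure
# from Google; real counts depend on the model's tokenizer.
TOKENS_PER_WORD = 1.3
CONTEXT_WINDOW_TOKENS = 2_000_000  # the announced 2 million token window


def estimate_tokens(word_count: int, tokens_per_word: float = TOKENS_PER_WORD) -> int:
    """Return an approximate token count for a text of `word_count` words."""
    return round(word_count * tokens_per_word)


def fits_in_window(word_count: int, window: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """Return True if the estimated token count is within the window."""
    return estimate_tokens(word_count) <= window


if __name__ == "__main__":
    words = 100_000
    tokens = estimate_tokens(words)
    print(f"{words:,} words is roughly {tokens:,} tokens")
    print(f"Share of window used: {tokens / CONTEXT_WINDOW_TOKENS:.1%}")
```

Under that assumption, a 100,000-word document would take up only a small fraction of the window, which is why whole repositories or long transcripts become plausible inputs.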

And now, here is how you can get started with it today.

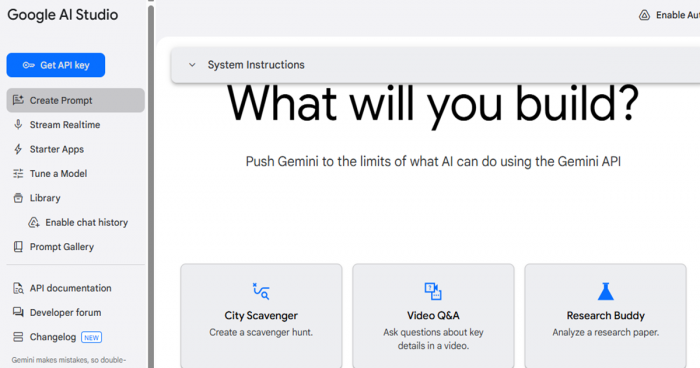

You can try Gemini 2.5 Pro Experimental via:

Google AI Studio (for developers and creators)

Gemini Advanced (with a paid subscription)

These platforms offer hands-on access for evaluating the model's features, along with integration into real-world applications.

Let's finish with the big picture.

With Gemini 2.5 Pro Experimental, Google is clearly competing with leading models from OpenAI and Anthropic. Its advanced reasoning, larger context window, and broad multimodal capabilities put it front and center in next-gen AI.

As models like this launch, expect more automated research tools, smarter virtual assistants, and deeper creative collaboration between people and machines.
